Hello! Long time, no scripting! I’ve been blowing through VCF, deploying, redeploying, and built some scripts to help me with this. Sharing is caring, read on to see what I’ve done…

VSAN

Building a home lab – Part 1 – Starting with CPU, Motherboard, PCIe Lanes and Bifurcation

Before we get started, a little info about this post

At a high level, I need to install five (5) PCIe NVMe SSDs into a homelab server. In this post I cover how the CPU and motherboard both play a role in how and where these PCIe cards can and should be connected. I learned that simply having slots on the motherboard doesn’t mean they’re all capable of the same things. My research was eye-opening and really helped me understand the underlying architecture of the CPU, chipset, and manufacturer-specific motherboard connectivity. It’s a lot to digest at first, but I hope this provides some insight for others to learn from. Before I forget, the info below applies to server motherboards, too, and plays a key role on dual-socket boards when only a single CPU is used.

Sometimes the hardest part of any daunting task is simply starting. I got some help from Intel here, though.

ESXi host stuck entering Maintenance Mode

Maintenance Mode task hangs

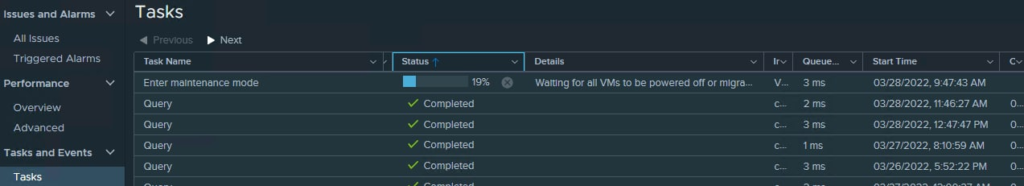

I told one of my nodes to enter maintenance mode and it sat overnight like this:

That screenshot was taken almost exactly 26 hours later. There were no running VMs on the host, nothing on the local datastore, no resyncing or rebuilding objects in vSAN, and nearly zero I/O on the network adapters.

I tried canceling the task, but it would not cancel.

I rebooted the host, and it came back into the cluster with that task still running.

I rebooted my vCenter, and that finally killed the task.

Getting Started with VMware vSAN: Hybrid or All Flash?

Everyone hears about VMware’s Virtual SAN and how awesome it is. It’s a very compelling offering, overshadowed only by their software-defined networking solution, NSX.

The biggest hurdle: how to get started.

The truth is it’s extremely simple to enable and start using, but that’s not the “getting started” I’m talking about. I wanted to cover off some things to think about when you’ve decided you’re going down the VSAN path.

How many IOPS should you expect? How much storage will you have, or need? Should you go hybrid or all flash? And what resiliency or protection options do you have, and what is the impact of each?
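To make the capacity side of that question concrete, here is a rough sketch of how the protection scheme changes usable space. The 12 TB raw figure is a made-up example, and the math deliberately ignores slack space, filesystem overhead, witness components, and any dedupe or compression savings, so treat it as a back-of-the-napkin estimate rather than a sizing tool. RAID-5/6 erasure coding is only available on all-flash vSAN (6.2 and later), which is one reason the hybrid vs. all-flash decision comes first.

```python
from fractions import Fraction

# Fraction of raw capacity left for data under each protection scheme.
# RAID-5/6 (erasure coding) requires all-flash vSAN 6.2 or later.
SCHEMES = {
    "RAID-1, FTT=1": Fraction(1, 2),  # two full copies of the data
    "RAID-1, FTT=2": Fraction(1, 3),  # three full copies of the data
    "RAID-5, FTT=1": Fraction(3, 4),  # 3 data + 1 parity
    "RAID-6, FTT=2": Fraction(4, 6),  # 4 data + 2 parity
}


def usable_tb(raw_tb, scheme):
    """Approximate usable TB; ignores slack space, overhead, and dedupe."""
    return float(raw_tb * SCHEMES[scheme])


if __name__ == "__main__":
    raw = 12  # hypothetical raw capacity-tier size in TB
    for name in SCHEMES:
        print(f"{name}: ~{usable_tb(raw, name):.1f} TB usable of {raw} TB raw")
```

In a hybrid cluster only the RAID-1 rows apply, and the cache tier does not count toward raw capacity.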

First things first: Hybrid or All Flash?

Bug in VSAN 6.2: De-dupe scanning running on hybrid datastores

UPDATE

VMware has posted a KB about this, which I did not realize at the time of writing the blog. https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2146267

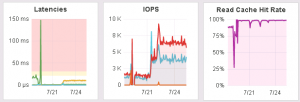

We’ve been testing out VSAN here at work and noticed that one of the clusters we rolled out had serious latency issues. We initially blamed the application running on the hosted VMs, but when it continued to get worse we finally opened a case with VMware. Here’s a chart of the kind of stats we were seeing (courtesy of SexiGraf):

Read latency in particular was very high on the datastore level, IOPS weren’t great, and Read Cache Hit Rate was low. We also saw that read and write latency was high on the VM level. After we opened a ticket with VMware, they discovered an undocumented bug in VSAN 6.2 where deduplication scanning is running even though deduplication is turned off (and actually unsupported in hybrid mode VSAN altogether). They provided the following solution:

For each host in the VSAN cluster:

1. Enter maintenance mode

2. SSH to the host and run: `esxcfg-advcfg -s 0 /LSOM/lsomComponentDedupScanType`

3. Reboot the host
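If you have more than a couple of hosts, the steps above can be sketched as a small dry-run helper that prints the commands per host instead of running them. This is not what VMware support handed us, just an illustration: the host names are hypothetical, entering maintenance mode through `esxcli` is an assumption (you can just as well do it from vCenter), and the `esxcfg-advcfg -g` read-back is an extra check I added to confirm the value before rebooting. Work through one host at a time and let vSAN settle before moving on to the next.

```python
SETTING = "/LSOM/lsomComponentDedupScanType"


def remediation_commands(host):
    """Return the shell commands for one host, in the order of the steps above."""
    return [
        # Step 1: enter maintenance mode (assumed to be done via esxcli here)
        f"ssh root@{host} esxcli system maintenanceMode set --enable true",
        # Step 2: turn off the dedupe scan
        f"ssh root@{host} esxcfg-advcfg -s 0 {SETTING}",
        # Extra check (not in the original steps): read the value back
        f"ssh root@{host} esxcfg-advcfg -g {SETTING}",
        # Step 3: reboot the host
        f"ssh root@{host} reboot",
    ]


if __name__ == "__main__":
    # Hypothetical host names; replace with the hosts in your cluster.
    for host in ["esx01.lab.local", "esx02.lab.local"]:
        print(f"# {host}")
        for command in remediation_commands(host):
            print(command)
```

Because it only prints, you can review the output and paste the commands one host at a time.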

After we applied the fix, the cluster rebalanced for a little while and came back looking much, much better. In the graph below, you can see right when the fix was applied: read latency drops, IOPS increase, and read cache hit rate jumps into the high 90s:

And for good measure, this is how it’s looked since:

So to summarize, if you are running hybrid VSAN 6.2, you should definitely check your latency and read cache hit rate. If you’re experiencing high latency and poor read cache hit rate, go through and change /LSOM/lsomComponentDedupScanType on all your hosts to 0. I can’t take credit for actually discovering this, so thank you to my coworker @per_thorn for tracking it down. And thank you @thephuck for letting me write it up on this blog!

VMware Virtual SAN Health failed Cluster health test

Here’s the error

While building a new environment for my lab, I ran across an interesting thing yesterday.

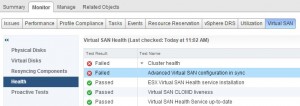

I looked at my cluster’s VSAN health and saw this error:

It’s complaining that my hosts don’t have matching Virtual SAN advanced configuration items.

If you click on that error, you’ll see at the bottom where it shows comparisons of hosts and the advanced configurations:

It shows VSAN.DomMaxLeafAssocsPerHost and VSAN.DomOwnerInflightOps as being different between a few of my hosts. Looking at the image above, you’ll see node 09 has values of 36000 and 1024, respectively, while the other nodes 10-12 show 12000 and 0.
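The comparison the health check is doing can be sketched in a few lines. This is only an illustration of the idea, not how the vSAN health service is implemented: the host names are stand-ins for my nodes, the values are the ones from the screenshot, and the function simply flags any host whose value differs from the most common one. Keep in mind the most common value is not necessarily the correct one.

```python
from collections import Counter


def find_mismatches(settings_by_host):
    """Return {setting: {host: value}} for hosts that differ from the most common value."""
    mismatches = {}
    all_keys = sorted({key for s in settings_by_host.values() for key in s})
    for key in all_keys:
        values = {host: s.get(key) for host, s in settings_by_host.items()}
        # On a tie, most_common() keeps the value it encountered first.
        majority, _ = Counter(values.values()).most_common(1)[0]
        outliers = {h: v for h, v in values.items() if v != majority}
        if outliers:
            mismatches[key] = outliers
    return mismatches


# Values as reported by the health check; host names are illustrative.
hosts = {
    "node09": {"VSAN.DomMaxLeafAssocsPerHost": 36000, "VSAN.DomOwnerInflightOps": 1024},
    "node10": {"VSAN.DomMaxLeafAssocsPerHost": 12000, "VSAN.DomOwnerInflightOps": 0},
    "node11": {"VSAN.DomMaxLeafAssocsPerHost": 12000, "VSAN.DomOwnerInflightOps": 0},
    "node12": {"VSAN.DomMaxLeafAssocsPerHost": 12000, "VSAN.DomOwnerInflightOps": 0},
}

if __name__ == "__main__":
    for setting, outliers in find_mismatches(hosts).items():
        print(setting, outliers)
```

With the values above, node 09 is the only host flagged, for both settings.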

I immediately went to the host configuration advanced settings in the web client, searched for VSAN, and didn’t see either of those. I even checked through PowerCLI and couldn’t see them: