Before we get started, a little info about this post

At a high level, I need to install five (5) PCIe NVMe SSDs into a homelab server. In this post I cover how the CPU & motherboard both play a role in how & where these PCIe cards can and should be connected. I learned that simply having slots on the motherboard doesn’t mean they’re all capable of the same things. My research was eye-opening and really helped me understand the underlying architecture of the CPU, chipset, and manufacturer-specific motherboard connectivity. It’s a lot to digest at first, but I hope this provides some insight for others to learn from. Before I forget, the info below applies to server motherboards, too, and plays a key role in dual-socket boards when only a single CPU is installed.

Sometimes the hardest part of any daunting task is simply starting. I got some help from Intel here, though.

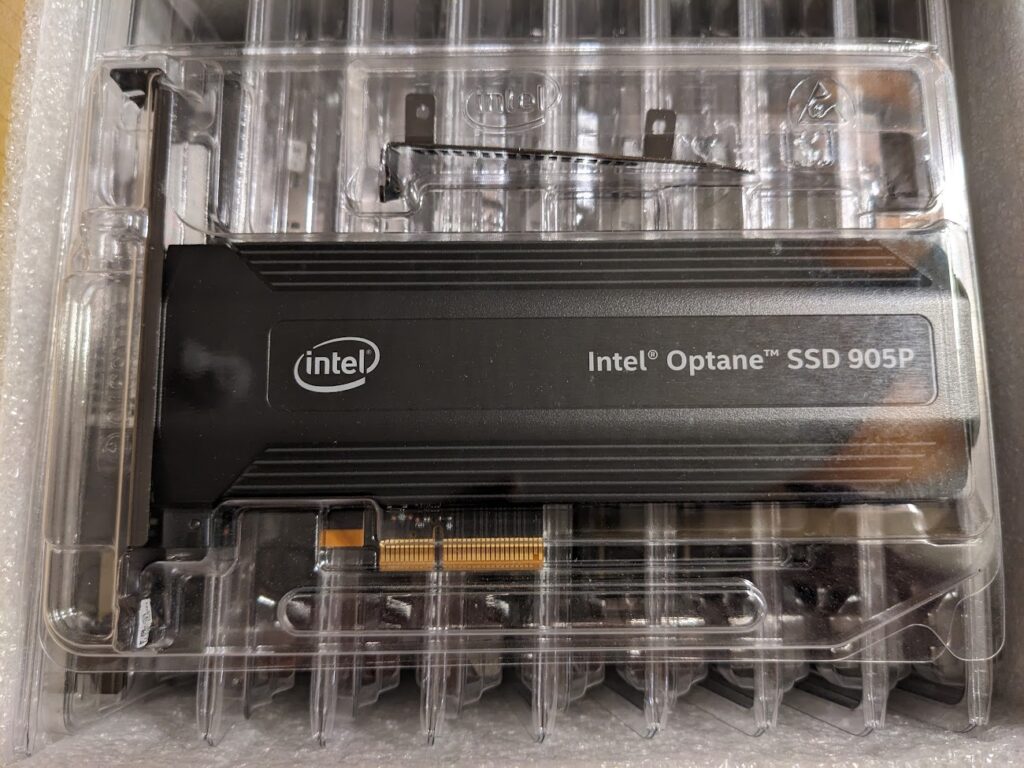

Through the vExpert program, I was lucky enough to be selected to receive some sample Optane disks from Intel. Since I was planning on building net-new home lab gear, I let them send whatever form factor they wanted.

Early this month (January ’23) I received 10 Intel Optane SSD 905Ps, and it was like Christmas all over again! The samples they sent are all PCIe 3.0 x4, so now the work begins to build a lab around them.

My plan is to build two machines in a 2-node vSAN cluster with the witness on either a NUC or one of my old Mac minis. This will be a budget home lab build.

A couple things come to mind:

- These are PCIe x4 NVMe disks

- I need to find motherboards with several PCIe slots

- CPUs with enough PCIe lanes

- Power consumption

I’ve settled on the Intel Core i7-12700K proc, which has eight (8) performance cores and four (4) efficient cores. Yes, ESXi doesn’t technically support that, but there are workarounds for this. I chose this chip because it has integrated graphics, so I don’t need an additional card. AMD does have some procs with integrated graphics, but price pushed them above the i7-12700K.

I immediately began looking at motherboards and found one with five (5) PCIe x16 physical slots, and the CPU I was looking at has 20 PCIe lanes. If you divide that by 4 (because the Optanes are PCIe x4), that’s five (5), so in theory I could install five PCIe NVMe disks, but in practice it doesn’t work that way.

I was wrong, way wrong. Not all PCIe slots are created equal.

What does this mean exactly? Let’s take a look…

First, let’s clarify that a PCIe “lane” is a point-to-point serial connection (a transmit pair plus a receive pair) between a device and the component it’s wired to, which is either the CPU or the chipset. When I say device, it could be anything from a GPU to a network adapter. In my case, it’s the Intel Optane NVMe SSD.

The motherboard and chipset actually determine where the lanes go. So while the CPU may have 20 PCIe lanes, it’s not as straightforward as having five x4 cards consume those 20 lanes.

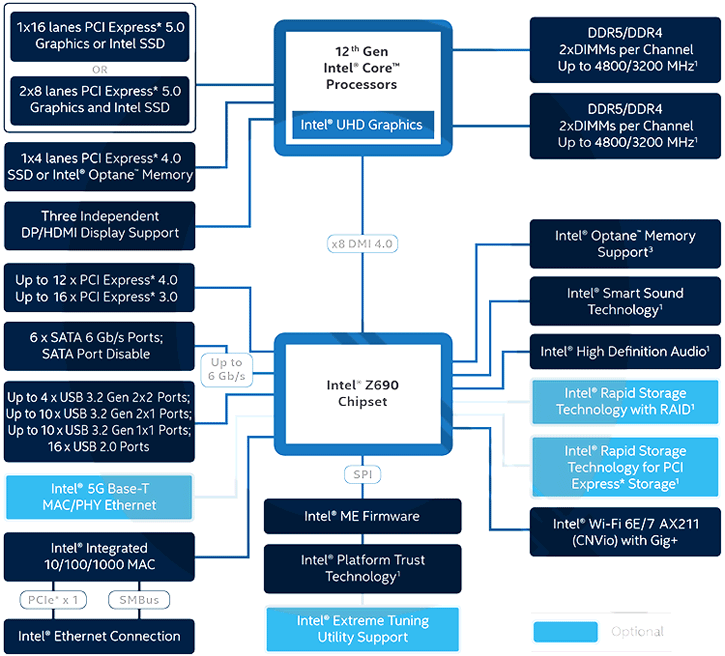

Let’s take a look at the Intel Z690 chipset, as it supports the i7-12700K CPU:

What is DMI? It’s Intel’s “Direct Media Interface,” which connects the CPU to Intel’s Platform Controller Hub (PCH). PCH and chipset are interchangeable here, so when you read motherboard documentation about where slots or other integrations are connected, some may say PCH and some may say chipset, but they mean the same thing.

So right off the bat, looking at the diagram, you can see the top left says 1×16 lanes -OR- 2×8 lanes, and they’re PCIe Gen 5. That single connection has 16 lanes going to it; typically that’s for your GPU. The next block down shows 1×4 lanes, so that’s another 4 lanes, and these are Gen 4. We’re at 20 PCIe lanes right there. Both the Intel B660 and Z690 chipsets support the same 1×16 PCIe 5.0 and 1×4 PCIe 4.0 lanes directly on the CPU, but the Z690 doubles the DMI link to the chipset (x8 instead of x4).
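To make the “20 lanes ÷ 4 = 5 disks” trap concrete, here’s a rough Python sketch. It models the CPU’s lanes as the two ports shown in the diagram (one x16 Gen 5, one x4 Gen 4), under the simplifying assumption that each port hosts exactly one device unless the x16 port is bifurcated. The port model and function names are hypothetical, just for illustration.

```python
# Hypothetical model of the CPU-attached PCIe ports from the Z690 diagram:
# one x16 Gen 5 port (splittable into 2x8) and one x4 Gen 4 port.
CPU_PORTS = [
    {"name": "x16 slot", "lanes": 16, "gen": 5},
    {"name": "CPU M.2", "lanes": 4, "gen": 4},
]


def naive_device_count(ports, lanes_per_device=4):
    """The 'divide total lanes by 4' estimate: ignores how lanes are grouped."""
    total = sum(p["lanes"] for p in ports)
    return total // lanes_per_device


def port_device_count(ports, x16_split=1):
    """One device per port; the x16 port hosts x16_split devices when bifurcated."""
    return sum(x16_split if p["lanes"] == 16 else 1 for p in ports)


print("Total CPU lanes:", sum(p["lanes"] for p in CPU_PORTS))
print("Naive x4 device count:", naive_device_count(CPU_PORTS))
print("Without bifurcation:", port_device_count(CPU_PORTS))
print("With x8/x8 bifurcation:", port_device_count(CPU_PORTS, x16_split=2))
```

The naive estimate says 5, but counting ports gives 2 without bifurcation and 3 with x8/x8, which is the gap the rest of this post works through.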

I’m considering two different motherboards, the Asus TUF Gaming Z690 Plus D4 and the Gigabyte B660 DS3H AX DDR4.

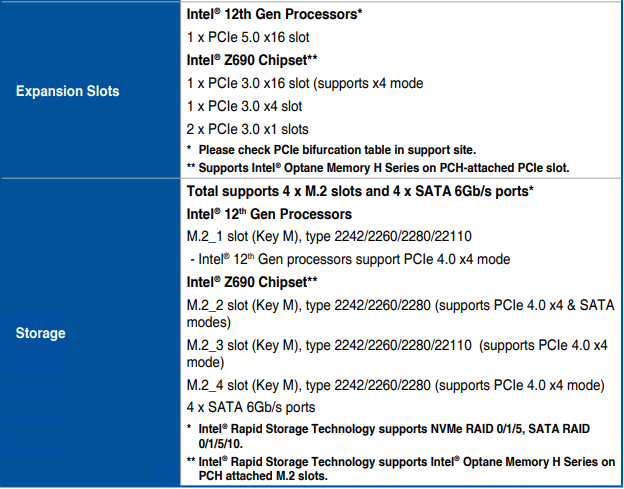

First, let’s take a look at the PCIe & storage slots on the Asus Z690:

The first thing to note is that only one PCIe slot is directly connected to the CPU. The other PCIe slots go through the chipset, and thus across the x8 DMI link. For storage, one of the M.2 slots is connected directly to the CPU over the remaining x4 PCIe 4.0 lanes, and the other three are on the chipset. There are M.2 riser cards that translate an M.2 slot into a PCIe x4 slot, so I can use the M.2 slots for this sample gear, too.

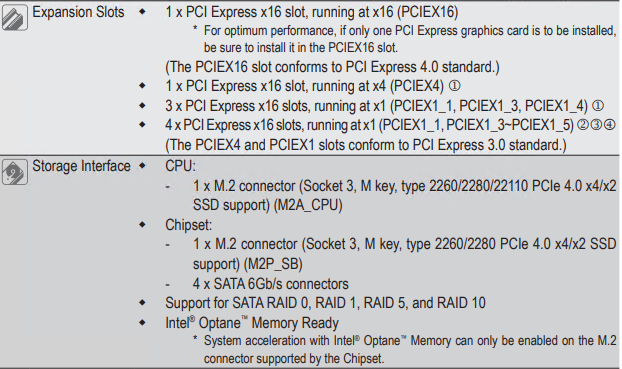

Now, here’s the Gigabyte B660:

The “AX” flavor of this motherboard is the (3) above, so it has one full x16 slot plus four slots that are physically x16 but electrically connected as PCIe x1. “Electrically connected” refers to how many PCIe lanes are actually “cabled” to that physical slot. Full-size x16 slots are common so that x16 cards physically fit, even when only 1, 4, or 8 PCIe lanes are connected. Why would they do that? I suppose you could run five GPUs in this box for mining without needing riser cards for the x16 GPUs. The manual doesn’t call out which PCIe slots are connected where, but we can assume the ones listed as PCIe 3.0 are on the chipset. The M.2 slots follow a similar pattern: one is on the CPU, but there’s only one additional M.2 slot on the chipset.
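A simplified way to think about physical vs. electrical width: a PCIe link trains to the widest lane count and newest generation that both the slot’s wiring and the card support, and the physical slot size doesn’t enter into it. The sketch below is a simplification (real link training can fall back further, for example on signal problems), and `negotiated_link` is a made-up name.

```python
def negotiated_link(slot_electrical_lanes, slot_gen, card_lanes, card_gen):
    """Simplified link training: both ends settle on the common width and generation.

    Returns (lanes, generation). Physical slot size is deliberately not a parameter.
    """
    return min(slot_electrical_lanes, card_lanes), min(slot_gen, card_gen)


# Optane 905P is a PCIe 3.0 x4 card.
print(negotiated_link(1, 3, 4, 3))   # x16-sized slot wired as PCIe 3.0 x1 -> (1, 3)
print(negotiated_link(16, 4, 4, 3))  # full x16 slot at Gen 4 -> (4, 3)
```

So an Optane dropped into one of those x16-sized, x1-wired slots runs as a single Gen 3 lane, no matter how big the slot looks.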

So what do I do? Enter Bifurcation.

In general, bifurcation means dividing something into branches. As it relates to this topic, it’s splitting a single PCIe x16 slot into multiple narrower PCIe links, usually x8 or x4. The key thing here is that it’s motherboard dependent. Not all motherboards can do this, so RTFM!

Why am I saying this? The Asus manual for this motherboard doesn’t specifically say it supports bifurcation, but you can see in the chipset diagram that it says 1×16 lanes -OR- 2×8 lanes. The Asus support page lists “PCIe bifurcation in PCIe x16 slot” with an “M.2 SSD quantity” of two (2), plus a note that says “Please insert M.2 SSD Cards in Hyper M.2 X16 Card socket 1 & 3.” That’s a bit wordy, but from what I gather, this x16 slot can bifurcate only into two x8 links, hence two SSDs and the guidance to use sockets 1 & 3 on the Hyper M.2 expander/riser card. A quick note about the Hyper M.2 card: it’s an Asus PCIe x16 card with four (4) M.2 sockets, designed to support up to x4/x4/x4/x4 bifurcation. You must skip socket 2 because sockets 1 & 2 sit in the same x8 link created by the motherboard. Since the motherboard only does two x8s, you get only two usable sockets, even though each SSD needs just x4.
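The “sockets 1 & 3” rule falls out of which lanes each M.2 socket sits on. Here’s a rough sketch that assumes the Hyper M.2 card wires socket 1 to lanes 0-3, socket 2 to lanes 4-7, socket 3 to lanes 8-11, and socket 4 to lanes 12-15 (that lane order is an assumption for illustration, chosen because it matches Asus’s guidance), and that an SSD only gets its own link when a bifurcated link starts at that socket’s first lane. The helper names are hypothetical.

```python
# Assumed lane layout of a 4-socket x16 M.2 carrier card (hypothetical mapping).
SOCKET_FIRST_LANE = {1: 0, 2: 4, 3: 8, 4: 12}


def link_start_lanes(split):
    """First lane of each link for a bifurcation split such as [8, 8]."""
    starts, lane = [], 0
    for width in split:
        starts.append(lane)
        lane += width
    return starts


def usable_sockets(split):
    """A socket gets its own link only if some link begins at its first lane."""
    starts = set(link_start_lanes(split))
    return [s for s, first in SOCKET_FIRST_LANE.items() if first in starts]


for split in ([16], [8, 8], [8, 4, 4], [4, 4, 4, 4]):
    label = "/".join(f"x{w}" for w in split)
    print(label, "->", usable_sockets(split))
```

With x8/x8 it reports sockets 1 and 3, matching the Asus note; an x8/x4/x4 split would add socket 4, and only a full x4/x4/x4/x4 split lights up all four.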

Now, let’s look at the Gigabyte motherboard’s bifurcation. I got the table above from the motherboard manual, but bifurcation isn’t mentioned there; it’s in the BIOS manual, which specifically says, “Allows you to determine how the bandwidth of the PCIEX16 slot is divided. Options: Auto, PCIE x8/x8, PCIE x8/x4/x4.” So this motherboard can split the x16 slot three ways, one more than the Asus.

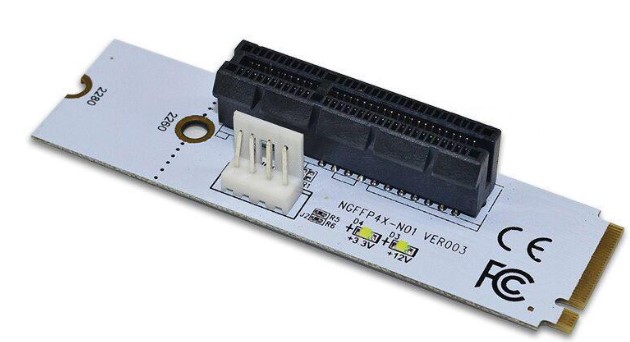

If you’re wondering what that looks like, there are multiple options, but one easy option is a bifurcation riser card, such as this (you can get them in 2-, 3-, or 4-slot versions):

There are also M.2-to-PCIe x4 riser cards.

Enter the CPU.

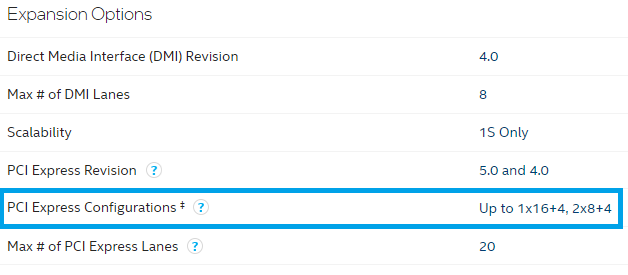

So far we’ve covered what bifurcation of PCIe lanes is, the risers that add the cards to the slots, and how different motherboards handle them. Now we have to check the CPU, because even though the motherboard may offer x8/x8, x8/x4/x4, or x4/x4/x4/x4, the CPU itself may not support it. Let’s see what Intel’s site for the CPU I want says (linked earlier in the article):

Well, Intel lists the PCI Express configurations as up to 1x16+4 or 2x8+4, which means the i7-12700K can only do x8/x8 bifurcation. That +4 is the M.2 slot directly connected to the CPU, which I’ll also use. Now that we know what each motherboard is capable of, and how that lines up with the CPU, here’s the placement of the Optane SSDs and how they’ll connect on each motherboard.

Asus:

- CPU
  - PCIe – Optane 1
  - PCIe – Optane 2
  - M.2 – Optane 3
- Chipset
  - M.2 – Optane 4
  - M.2 – Optane 5

Gigabyte:

- CPU
  - PCIe – Optane 1
  - PCIe – Optane 2
  - M.2 – Optane 3
- Chipset
  - M.2 – Optane 4
  - PCIe x1 – Optane 5
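The placements above come from intersecting two lists: the splits each motherboard offers on its CPU-attached x16 slot and the splits the CPU supports. Here’s a small sketch of that check. The board lists are transcribed from the Asus diagram and the Gigabyte BIOS options quoted earlier (“x16” stands for leaving the slot unsplit), the CPU list reflects the 1x16 or 2x8 configurations Intel lists for the i7-12700K (not counting the separate +4 link), and the helper names are hypothetical.

```python
def parse_split(mode):
    """Turn a mode string like 'x8/x4/x4' into a tuple of lane widths."""
    return tuple(int(part.strip().lstrip("x")) for part in mode.split("/"))


# Splits the CPU supports on its x16 port (1x16 or 2x8).
CPU_SPLITS = {parse_split("x16"), parse_split("x8/x8")}

# Splits each board offers on its CPU-attached x16 slot.
BOARD_SPLITS = {
    "Asus TUF Gaming Z690 Plus D4": ["x16", "x8/x8"],
    "Gigabyte B660 DS3H AX DDR4": ["x16", "x8/x8", "x8/x4/x4"],
}


def usable_splits(board_modes, cpu_splits=CPU_SPLITS):
    """Keep only the modes that both the board and the CPU support."""
    return [m for m in board_modes if parse_split(m) in cpu_splits]


def max_x16_devices(board_modes):
    """Most devices the x16 slot can host under any mutually supported mode."""
    return max(len(parse_split(m)) for m in usable_splits(board_modes))


for board, modes in BOARD_SPLITS.items():
    print(f"{board}: {usable_splits(modes)}, up to {max_x16_devices(modes)} devices")
```

Both boards land on x8/x8, i.e. two Optanes in the x16 slot, which is why the Gigabyte’s extra x8/x4/x4 option doesn’t help with this CPU.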

If you’re wondering why I chose the M.2 slots on the chipset instead of the PCIe slots, it’s because those M.2 slots are listed as PCIe 4.0 on both motherboards, while the additional PCIe slots are 3.0. PCIe 4.0 runs at 16 GT/s and PCIe 3.0 at only 8 GT/s, which works out to about 1.97 GB/s and 985 MB/s per lane, respectively. As you can probably deduce from 4.0 vs 3.0, 5.0 doubles bandwidth again to 32 GT/s, or about 3.94 GB/s per lane.
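Those per-lane figures come from the transfer rate and the line encoding: PCIe 3.0 and newer use 128b/130b encoding, so 128 of every 130 bits carry data, and dividing by 8 converts bits to bytes. A quick sketch (per direction, ignoring packet and protocol overhead, so real-world throughput will be somewhat lower):

```python
def per_lane_gbps(gt_per_s):
    """Per-lane, per-direction throughput in GB/s for 128b/130b-encoded PCIe."""
    return gt_per_s * (128 / 130) / 8


for gen, rate in {3: 8, 4: 16, 5: 32}.items():
    print(f"PCIe {gen}.0: {rate} GT/s -> {per_lane_gbps(rate):.3f} GB/s per lane")
```

Multiply by the lane count for a whole link; for example, four PCIe 3.0 lanes come to roughly 3.94 GB/s.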

Confused yet? I sure was as I was learning this.

From a PCIe version perspective, the Asus has an advantage because it puts two Optanes on 5.0 and three on 4.0, while the Gigabyte has four on 4.0 but leaves one lone Optane on a PCIe 3.0 x1 slot. That sucks; I don’t want to do that. That being said, if you remember back to the beginning of this blog, the Optane 905P’s interface is PCIe 3.0 x4, which negates any advantage of those two PCIe 5.0 slots. The SSD’s specs list sequential read/write at 2500/2000 MB/s, respectively. Math says PCIe 3.0 can do 3940 MB/s over four lanes, but only 985 MB/s over a single lane, so the Optane in that x1 slot would be capped at roughly 40% of its rated sequential read. Right now the Asus is the clear winner, especially since the Z690 has double the DMI bandwidth. The PCIe versioning is kind of moot, but the number of lanes is not.

For what it’s worth, I could use the Asus motherboard without bifurcation; it would look like this:
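Putting the drive spec and the link math together, a rough ceiling for each Optane is the lower of its rated sequential read (2500 MB/s) and what its negotiated link can carry. This sketch simplifies link training to “min of widths, min of generations” and ignores protocol overhead; `read_ceiling_mbps` is a hypothetical helper.

```python
# Optane 905P: PCIe 3.0 x4, 2500 MB/s rated sequential read.
OPTANE_905P = {"lanes": 4, "gen": 3, "seq_read_mbps": 2500}
GT_PER_S = {3: 8, 4: 16, 5: 32}


def per_lane_mbps(gen):
    """Per-lane throughput in MB/s with 128b/130b encoding (no protocol overhead)."""
    return GT_PER_S[gen] * 1000 * (128 / 130) / 8


def read_ceiling_mbps(slot_lanes, slot_gen, drive=OPTANE_905P):
    """Lower of the drive's rated read and the bandwidth of the negotiated link."""
    lanes = min(slot_lanes, drive["lanes"])
    gen = min(slot_gen, drive["gen"])
    return min(drive["seq_read_mbps"], lanes * per_lane_mbps(gen))


print("Bifurcated x8 Gen 5 slot:", round(read_ceiling_mbps(8, 5)), "MB/s")
print("Chipset M.2 Gen 4 x4:", round(read_ceiling_mbps(4, 4)), "MB/s")
print("PCIe 3.0 x1 slot:", round(read_ceiling_mbps(1, 3)), "MB/s")
```

Every x4-capable placement tops out at the drive’s own 2500 MB/s, while the x1 slot caps it around 985 MB/s, which is why lane count matters more than PCIe version for these drives.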

- CPU
  - PCIe 5.0 – Optane 1
  - M.2 PCIe 4.0 – Optane 2 via riser
- Chipset
  - M.2 PCIe 4.0 – Optane 3 via riser
  - PCIe 3.0 – Optane 4
  - PCIe 3.0 – Optane 5

The 905P is PCIe 3.0 and won’t push the limits of that Gen 3 PCIe slot. However, that puts three of the five Optanes (60% of the available storage) on the chipset, meaning their traffic has to traverse the DMI. DMI 4.0 runs at 16 GT/s per lane, the same rate as PCIe 4.0, so with eight DMI 4.0 lanes the available throughput is roughly 15.75 GB/s in each direction. I seriously doubt I’d saturate that link, since the three Optanes could pull a theoretical maximum aggregate of only 7.5 GB/s (3 × 2500 MB/s). Personally, I still want the data as close to the CPU as possible.
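Here’s that DMI back-of-the-envelope math as a sketch, assuming DMI 4.0 behaves like a PCIe 4.0 link (16 GT/s per lane, 128b/130b encoding) and ignoring protocol overhead and any other chipset traffic (USB, SATA, networking) that shares the same uplink. The B660’s x4 DMI is included only for comparison.

```python
def link_gbps(lanes, gt_per_s=16):
    """Per-direction throughput in GB/s, assuming 128b/130b encoding."""
    return lanes * gt_per_s * (128 / 130) / 8


dmi_z690 = link_gbps(8)  # Z690: DMI 4.0 x8
dmi_b660 = link_gbps(4)  # B660: DMI 4.0 x4, for comparison
optanes_on_chipset = 3 * 2.5  # three 905Ps at 2500 MB/s sequential read, in GB/s

print(f"Z690 DMI: {dmi_z690:.2f} GB/s, load {optanes_on_chipset / dmi_z690:.0%}")
print(f"B660 DMI: {dmi_b660:.2f} GB/s, load {optanes_on_chipset / dmi_b660:.0%}")
```

On the Z690, three Optanes reading flat out would use a bit under half of the uplink, while the same three drives would nearly fill an x4 DMI link.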

Huge thanks to u/lithium3r in r/homelab, as they explained a lot of this to me to help me understand how it all works.

I thought I knew exactly what I wanted, but after this research, I’m kind of back to the drawing board. It’s true that most AMD systems are more bifurcation-friendly, but I’m looking for a proc with integrated graphics so I don’t have to burn a slot on a GPU. I’ll probably still go Intel, but we’ll see in the next post…

In conclusion, I hope this article helps you understand what bifurcation is and how to determine what your specific motherboard & CPU combo can do.