If your ESXi host isn’t showing you the expected DCUI, and instead you’re seeing something like the following:

Shutting down firmware service...

Using 'simple offset' UEFI RTS mapping policy

Relocating modules and starting up the kernel...

Don’t be alarmed; this is actually somewhat normal.

‘Somewhat’ meaning that at some point someone likely ran `esxcli system settings kernel set -s vga -v FALSE` so that GPU passthrough would persist across reboots (more information on that in William Lam’s blog post and comments). Hi! It’s me! I’m the problem! It’s me! The last line, ‘Relocating modules and starting up the kernel...’, is the key thing here: it tells us we’re good to go. If all goes as planned, you should still be able to ping the host, as well as log into the host UI.
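If you want a quick way to confirm the host really is alive behind that frozen-looking screen, something like the following can be run from another machine on the network. This is only a rough sketch: `check_host` is a name I made up, the hostname in the usage line is a placeholder, and I’m assuming the host UI answers at `https://<host>/ui/` and that your `ping` accepts `-c`.

```shell
#!/bin/sh
# Hypothetical helper (not part of ESXi): check an ESXi host from a workstation.
# Usage: check_host <hostname-or-ip>
check_host() {
    host="$1"
    if [ -z "$host" ]; then
        echo "usage: check_host <hostname-or-ip>" >&2
        return 2
    fi

    # Send three ICMP echo requests; ping exits 0 if the host replied.
    if ping -c 3 "$host" >/dev/null 2>&1; then
        echo "ping: ok"
    else
        echo "ping: no reply"
        return 1
    fi

    # -k skips certificate validation, since lab hosts usually have
    # self-signed certificates. The /ui/ path is an assumption.
    if curl -k -s -o /dev/null --max-time 10 "https://$host/ui/"; then
        echo "host UI: reachable"
    else
        echo "host UI: not reachable"
        return 1
    fi
}
```

For example, `check_host esxi01.lab.local` (a placeholder name) should report both checks as fine if the host only lost its console and nothing else.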

I have a four-node home lab and pass a GPU through to a specific VM, but the PCIe passthrough did not persist whenever I shut my lab down, so I disabled VGA to allow the VM to take control of the GPU. Otherwise, ESXi takes control of the GPU to use it for the DCUI. This is where I had an issue, as the machine with the GPU was offline and would not ping. I had a slight panic attack when I saw that blank screen saying scary words, but fear not, we can get back to the DCUI!

How do I revert and get back to my DCUI?

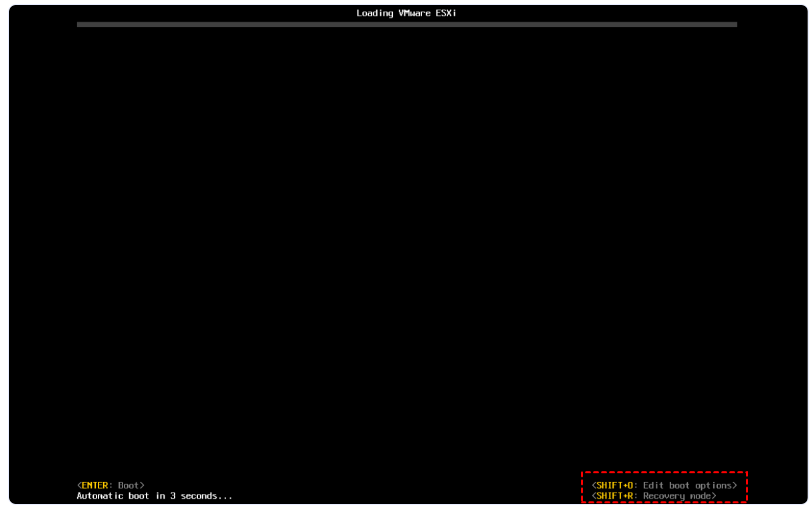

This is actually pretty simple. When you’re at the boot.cfg screen that has a 5 second countdown, quickly hit Shift+O (that’s the letter o, as in boot Options):

This lets you append to the boot options that come from boot.cfg. Simply add vga=TRUE to the end of the line and hit Enter, and the host will boot back to your DCUI screen.
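One caveat: my understanding is that options typed at the Shift+O prompt only apply to that one boot, so the saved setting is still FALSE and the DCUI will be gone again after the next restart. If you want it back for good (and are willing to give up the persistent passthrough behaviour), the setting can be checked and flipped from an SSH session on the host. A minimal sketch, assuming SSH is enabled; the `show_vga` and `set_vga` wrapper names are made up for this post, and only the `esxcli` commands inside them are real.

```shell
#!/bin/sh
# Hypothetical wrappers for an SSH session on the ESXi host.

# Print the configured and runtime values of the 'vga' kernel setting.
show_vga() {
    esxcli system settings kernel list -o vga
}

# Change the saved 'vga' kernel setting. Accepts TRUE or FALSE only.
# Assumption: the new value is picked up on the next boot.
set_vga() {
    case "$1" in
        TRUE|FALSE)
            esxcli system settings kernel set -s vga -v "$1"
            ;;
        *)
            echo "usage: set_vga TRUE|FALSE" >&2
            return 1
            ;;
    esac
}
```

Running `show_vga` first is a cheap way to confirm what the host thinks the setting is before changing anything; `set_vga TRUE` brings the DCUI back permanently, and `set_vga FALSE` puts things back the way they were for passthrough.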

Happy labbing!!!