I’m sure many of you know that VSAN’s Failures To Tolerate, or FTT, adds overhead to both your cluster & your data. It’s no secret that FTT=1 doubles your data; think of it as N+1 copies of your data, where N is the FTT value. Depending on the FTT you pick, you could have two, three, or four copies of your data. Redundancy is a good thing!

When you look at the cluster side of it, there is another ‘gotcha’: the number of hosts you need becomes 2N+1. With FTT=1, that’s 2(1)+1 = 3 hosts. FTT=2 requires 5 hosts, and FTT=3 requires 7.
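To make the two formulas concrete, here’s a small sketch of the arithmetic. It assumes the mirrored layout described above (N+1 full copies, 2N+1 hosts), and the function names are just illustrative, not anything from VSAN itself. The raw-capacity figure only counts the data copies and ignores witness and metadata overhead.

```python
def mirror_copies(ftt: int) -> int:
    """Full copies of each object for a mirrored policy: N + 1."""
    return ftt + 1


def min_hosts(ftt: int) -> int:
    """Minimum hosts in the cluster to satisfy the policy: 2N + 1."""
    return 2 * ftt + 1


def raw_capacity_gb(vmdk_gb: float, ftt: int) -> float:
    """Raw capacity a disk consumes from its data copies alone (no witness/metadata)."""
    return vmdk_gb * mirror_copies(ftt)


for ftt in (1, 2, 3):
    print(
        f"FTT={ftt}: {mirror_copies(ftt)} copies, "
        f"at least {min_hosts(ftt)} hosts, "
        f"100 GB disk uses {raw_capacity_gb(100, ftt):g} GB raw"
    )
```

For FTT=1 this prints 2 copies, at least 3 hosts, and 200 GB raw for a 100 GB disk, which is the “doubles your data” point from the top of the post.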

What’s the problem?

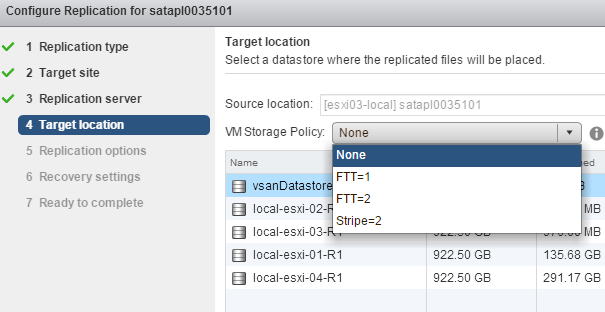

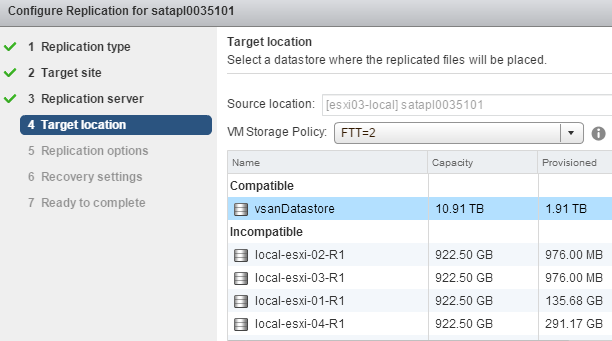

Let’s step through it! In the web client (the only place you can configure vSphere Replication 5.8, #sadface), you can choose whatever storage policy is available at the target site, and the wizard will show the compliant datastores. Take a look at this:

Notice something odd? As you can guess from the four local datastores, I have only four nodes in the cluster. I’ve selected FTT=2 and it says compliant, but we all know it’s not, since FTT=2 needs five hosts. LIAR! :P If you finish the wizard, vSphere Replication will actually complete successfully:

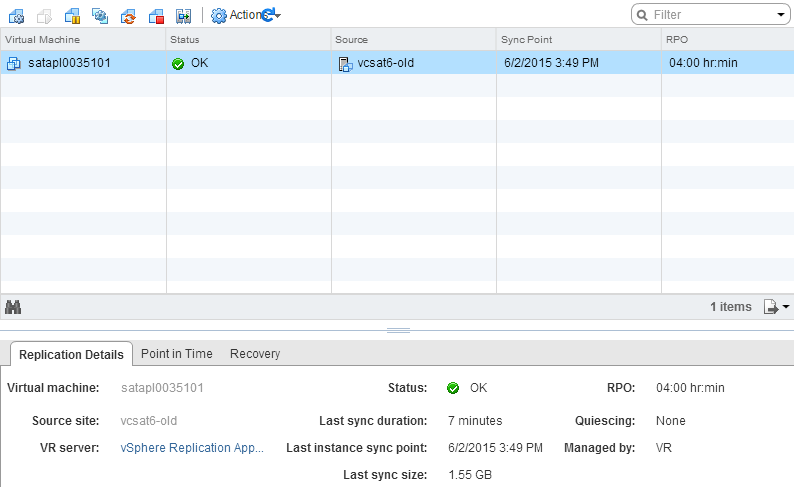

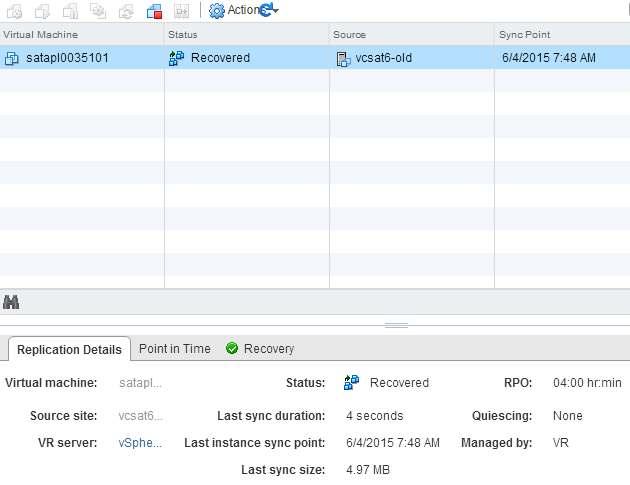

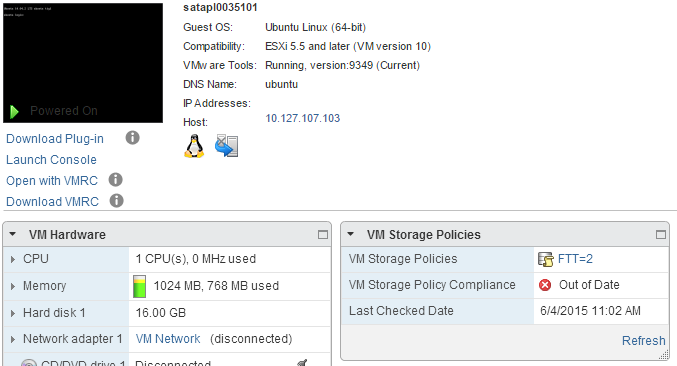

You can even recover that VM:

There is a problem!

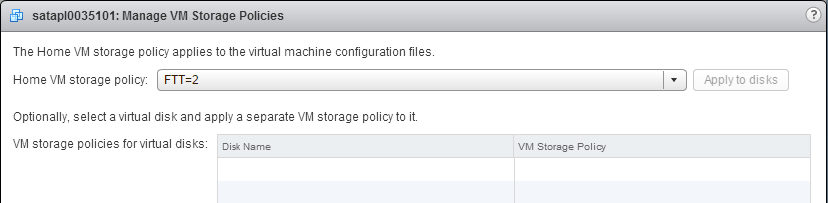

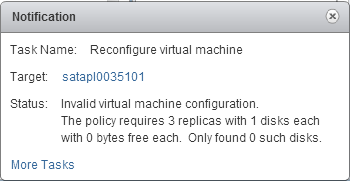

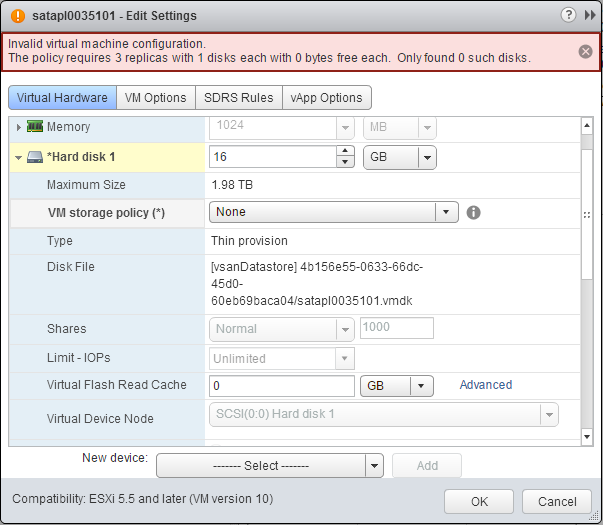

Say you do what I did here: assign an FTT=2 policy to a VM and replicate it to a VSAN datastore that can’t meet the policy. You can recover it, but it’ll keep the FTT=2 policy and won’t be compliant. The problem is that you can’t change the policy after the fact. I tried, and it failed:

I tried setting it to no policy at all and it still failed:

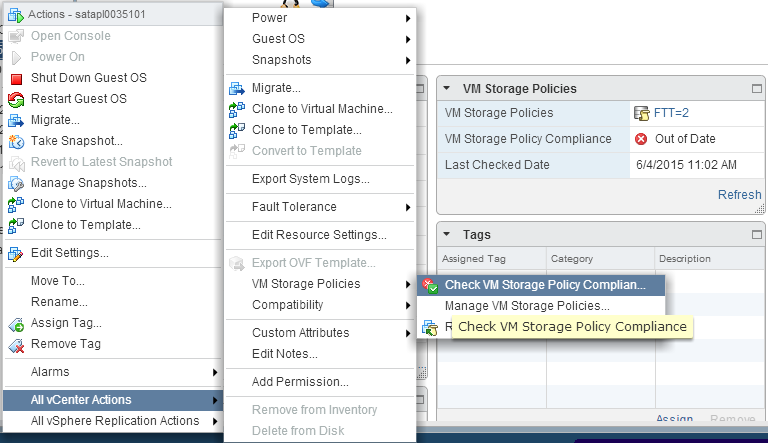

I tried forcing it to check compliance, but nothing ever happened:

It never updated; it just showed ‘Out of Date’:

There may have been ways to fix it, but I simply deleted the target VM, re-enabled replication for the original source VM with FTT=1, and recovered it again.

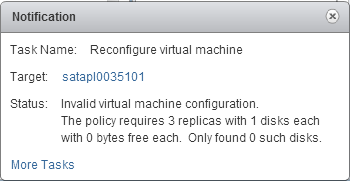

However, if you’re using SRM with vSphere Replication, protecting the VM will still work. What’s funny, though, is that if you run a test failover, it’ll fail with this error:

And make sure you DO NOT run a recovery; you’ll get a similar error:

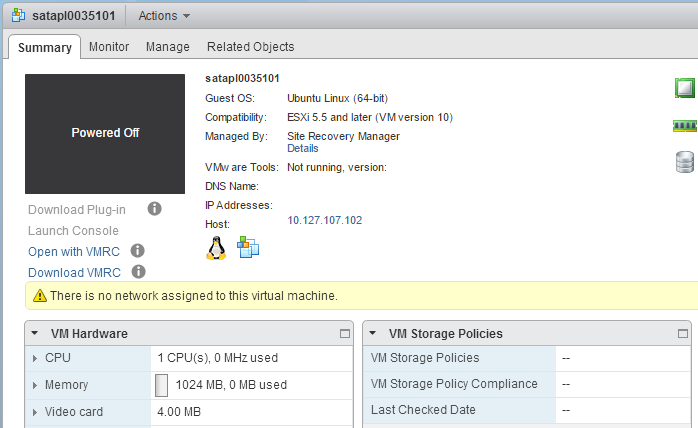

I checked the placeholder VM beforehand, and since it doesn’t have any disks, it doesn’t seem to care about the policy:

So I tried to manually assign a storage policy, and that’s where I saw there were no disks:

If you ran a test, you can easily click Cleanup, change your FTT level in vSphere Replication, then run another test and maybe a recovery. However, if you already ran a recovery, you’ll be kind of stuck. You can’t reconfigure vSphere Replication because the storage steps have already been done. You can change the Storage Policy on the VM, but when you run the recovery again, it tries to force the original FTT=2 Storage Policy and fails.

The fix in case you did run a recovery

I tested this and, without wanting to go in and do brain surgery, I looked for a quick way to fix it. There wasn’t one, though. Here’s what I did:

I stopped vSphere Replication, deleted the protection group, deleted the recovery plan, and then had to unregister & re-register the original source VM in order to set up vSphere Replication again.

***UPDATE June 10, 2015***

As Jeff points out below, a Storage vMotion to a different datastore, then back to your VSAN datastore, will allow you to assign a new Storage Policy that is compliant.

What’s your point?

Here’s what you need to watch out for: when setting up vSphere Replication, don’t apply a VSAN storage policy that exceeds the abilities of your target VSAN cluster. It will let you specify a higher FTT than the cluster can support. Of course, you have to create that storage policy to make it available to vSphere Replication, so chances are you won’t, unless you’re testing.

The real problem is that vSphere Replication will let you specify a storage policy without really checking whether it’s truly compliant. SRM doesn’t check either when creating the placeholder.
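Since the tools won’t stop you, a manual sanity check before picking a target policy is cheap. Below is a minimal sketch of that check using the 2N+1 rule from earlier. The function names are hypothetical (this is not a VMware API), and it assumes you already know the target cluster’s host count from somewhere else.

```python
def min_hosts(ftt: int) -> int:
    """Minimum VSAN hosts for a mirrored policy with the given FTT (2N + 1)."""
    return 2 * ftt + 1


def max_supported_ftt(host_count: int) -> int:
    """Highest FTT a cluster of host_count hosts can satisfy under 2N + 1."""
    return max(0, (host_count - 1) // 2)


def check_replication_target(policy_ftt: int, target_hosts: int) -> None:
    """Hypothetical pre-flight check: raise if the target cluster is too small.

    This is an illustration only; it is not part of vSphere Replication or SRM.
    """
    needed = min_hosts(policy_ftt)
    if target_hosts < needed:
        raise ValueError(
            f"FTT={policy_ftt} needs at least {needed} hosts, "
            f"but the target cluster has {target_hosts}; "
            f"highest supportable FTT is {max_supported_ftt(target_hosts)}"
        )


# The scenario from this post: an FTT=2 policy aimed at a four-node cluster.
try:
    check_replication_target(policy_ftt=2, target_hosts=4)
except ValueError as err:
    print(f"Not compliant: {err}")

check_replication_target(policy_ftt=1, target_hosts=4)  # passes silently
```

For the four-node lab above, the check rejects FTT=2 and reports FTT=1 as the highest policy the cluster can actually honor, which is exactly the policy I ended up re-replicating with.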

Sorry for the super long post, but this took lots of testing and screenshots & validation to make sure I was reporting good info :)

Hi Luke. Interesting and well documented article. I think the key point is “you have to create that storage policy to make it available for vSphere Replication, so chances are you won’t, unless you’re testing.” You are correct in stating that vSphere Replication and SRM do not verify that a storage policy can actually be supported by the datastore.

I did some quick testing with VSAN 6.0 and vSphere Replication 6.0 and found results that varied somewhat from your experience with 5.x. First, the vSphere Replication UI will differentiate compatible and incompatible datastores based on the storage policy selected. However, you can still select an incompatible datastore and finish the replication configuration. vSphere Replication will replicate to the target datastore with no issues. I was also able to recover and power on the VM successfully in spite of the incompatible policy (I too configured FTT=2 with a 4-node VSAN datastore). Once the VM was recovered, I was not able to change the storage policy. Workaround: Storage vMotion to another datastore selecting a compatible policy for that datastore, then Storage vMotion back to the VSAN datastore selecting a compatible VSAN storage policy. Thank you for making readers aware of this potential pitfall. I am looking into having a VMware KB article published around this topic.

Thanks Jeff! You’re right, chances are no one will actually end up in this situation. I didn’t test a storage vMotion, so that’s good to know.