After we deployed the Nexus 1000v, its reservations set the slot size in our cluster to 1.5GHz of CPU and 2GB of RAM. I didn’t want to waste slots on guests that may never reach that size (or only partially fill a slot), so I wanted to carve the cluster into smaller slots, similar to using a smaller block size on a drive to maximize space.

Using percentage reservations with vSphere, you can get around slot sizes, but what if you’re starting with a small cluster and growing it as resources are needed? How could I carve 25% out of a 2-node cluster? Sure, you can do it, but if you’re running at the full 75% (with 25% reserved for failover) and lose a host, only 50% of the cluster is left, so you don’t have enough resources and are over-committed by 25%.
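To put numbers on that 2-node example, here is a rough sketch of the arithmetic. It assumes every host is the same size and that the cluster is loaded right up to the admission control limit; the function name is just something I made up for illustration.

```python
def overcommit_after_failure(hosts: int, reserved_pct: float, failed: int = 1) -> float:
    """Shortfall after losing hosts, as a percentage of the original cluster capacity.

    Assumes identical hosts and a cluster running at (100 - reserved_pct)% load.
    """
    load_pct = 100 - reserved_pct                 # what the VMs are using
    surviving_pct = 100 * (hosts - failed) / hosts  # capacity left after the failure
    return max(0.0, load_pct - surviving_pct)


# 2-node cluster, 25% reserved, one host lost: 75% load on 50% capacity
print(overcommit_after_failure(hosts=2, reserved_pct=25))  # 25.0

# The same 25% reservation is only enough once the cluster reaches 4 hosts
print(overcommit_after_failure(hosts=4, reserved_pct=25))  # 0.0
```

In other words, the percentage you reserve has to be at least the share of the cluster that a single host represents.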

The following advanced settings will help reduce your slot size, but they may also have a negative impact by leaving too little capacity reserved if you end up in a failover state.

The three I’m setting are:

- das.slotCpuInMhz – This is the smallest ‘block’ of CPU resources to call a slot; I set mine to 500MHz. If you have a cluster with 44GHz available, this gives you 88 total slots (in practice fewer, due to host reservations, etc.). If it’s a simple 2-node cluster with one host reserved for failover, you’d have 44 usable slots (again, the actual value will be less). If you don’t change the slot size and have a guest with a CPU reservation of 1.5GHz, your slot size becomes 1500MHz, which gives you something like 14 usable slots, and even fewer in practice.

- das.slotMemInMB – The same applies here; I set mine to 512MB. Chances are I’ll never go lower than that, but a guest with 1.5GB of RAM will then use three 512MB slots instead of one 2GB slot (the size set by the Nexus’ RAM reservation), which would essentially waste 512MB.

- das.slotNumVCpus – If you have some multi-vCPU systems, this can be useful, because the slot is otherwise sized to the highest vCPU count in the cluster. Say you’re testing a server and give it 4 vCPUs: your slot size is now 4 vCPUs. Even if you have 99 single-vCPU guests and only one with 4 vCPUs, the slot is set to 4 vCPUs and you basically have 75% waste. I set mine to 1, which essentially ignores the vCPU count for slot sizes.
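The slot math in the list above can be sketched in a few lines. This is only a back-of-the-napkin model: it assumes two identical 22GHz hosts (to match the 44GHz example), looks at CPU only when counting slots, and ignores host overhead, so real numbers from Advanced Runtime Info will be lower. The function names are mine, not anything from vSphere.

```python
import math


def slots_per_host(host_cpu_mhz: int, slot_cpu_mhz: int) -> int:
    """Whole CPU slots that fit on one host (overhead ignored)."""
    return host_cpu_mhz // slot_cpu_mhz


def usable_slots(host_cpu_mhz: int, hosts: int, failover_hosts: int, slot_cpu_mhz: int) -> int:
    """Slots left once the failover hosts are set aside."""
    return slots_per_host(host_cpu_mhz, slot_cpu_mhz) * (hosts - failover_hosts)


def slots_consumed(reservation_mb: int, slot_mem_mb: int) -> int:
    """Memory slots a guest takes up; a guest always uses at least one."""
    return max(1, math.ceil(reservation_mb / slot_mem_mb))


# Hypothetical 2-node cluster, 22GHz per host (44GHz total)
print(usable_slots(22000, hosts=2, failover_hosts=0, slot_cpu_mhz=500))   # 88
print(usable_slots(22000, hosts=2, failover_hosts=1, slot_cpu_mhz=500))   # 44
print(usable_slots(22000, hosts=2, failover_hosts=1, slot_cpu_mhz=1500))  # 14

# A 1.5GB guest: three 512MB slots, or one 2GB slot with 512MB left unused
print(slots_consumed(1536, 512))   # 3
print(slots_consumed(1536, 2048))  # 1
print(2048 - 1536)                 # 512
```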

For a more in-depth explanation of this, take a look at this post by Duncan at YellowBricks.

**UPDATE August 15th, 2012**

This older post needed some updating. The percentages I pointed out above are misleading. Yes, they do carve out cluster resources to reserve for failover capacity, but if none of your VMs have reservations, it subtracts a very small amount from your total resources. The percentage is based on Reservations Only (plus memory overhead, which is minimal), so if you have zero reservations, only memory overhead gets subtracted from the 100% of the cluster memory. This is true for CPU, too, but if there isn’t a CPU reservation, it defaults to 32MHz (256MHz for 4.0 & 4.1).

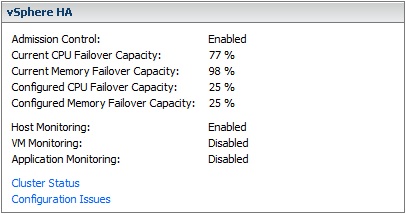

Let’s take a look at this:

It shows my current memory failover capacity at 98%, which means I’m using only 2%. This is a 10-node cluster with 241 VMs and 2TB of physical RAM. I’ve got almost 1TB provisioned (about 1009GB), so that’s almost 50%. Why is it only showing I’m using 2%? It’s because the percentage is based on reservations, like I said in the paragraph above. By default, the memory reservation is 0, so it only takes into account memory overhead (minimal).

It takes the total memory, subtracts the memory reservations and overhead, then divides by the total again to get the current failover capacity. If I set reservations matching provisioned RAM, it would be like this: (2048GB - 1009GB) / 2048GB = 0.50732421875, or 50.73%. Since I don’t have any reservations, it’s like this: (2048 - 41) / 2048 = 0.97998046875, or 98%. I used 41GB for overhead because I estimated 2% usage of the 2TB of RAM, which works out to an average overhead of 174.21MB per VM.
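Here is the same arithmetic as a small sketch, in case you want to plug in your own cluster’s numbers. The function name is made up for illustration, and the 41GB overhead figure is my estimate from above, not something read from vCenter.

```python
def failover_capacity_pct(total_gb: float, reserved_gb: float, overhead_gb: float = 0.0) -> float:
    """Current failover capacity: what is left after reservations and overhead."""
    return (total_gb - reserved_gb - overhead_gb) / total_gb * 100


# If every VM had a reservation matching its provisioned RAM (about 1009GB)
print(round(failover_capacity_pct(2048, 1009), 2))               # 50.73

# No reservations at all, only the estimated 41GB of memory overhead
print(round(failover_capacity_pct(2048, 0, overhead_gb=41), 2))  # 98.0

# Average overhead per VM across 241 VMs, in MB
print(round(41 * 1024 / 241, 2))                                 # 174.21
```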

The ONLY way the percentage-based admission control is really accurate is if 100% of your VMs have reservations.

I still use the host failures policy, and generally set the number near 20-25% of the cluster (2 nodes for a 10-node cluster). Lately I’ve only been using das.slotNumVCpus and setting it to 1, because the computed values for the other two (das.slotCpuInMhz and das.slotMemInMB) tend to be less than what I had set them at (500 & 512, respectively). The only cluster where I use das.slotCpuInMhz and das.slotMemInMB is the one where my Nexus 1000v resides, because it has reservations that I want to chop up.

Don’t forget to look at your Advanced Runtime Info for your cluster to choose the right settings for you.

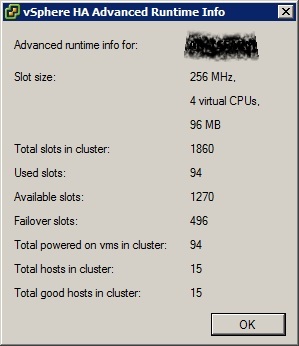

Here is my 15-node cluster with a 4-host reservation and no advanced settings:

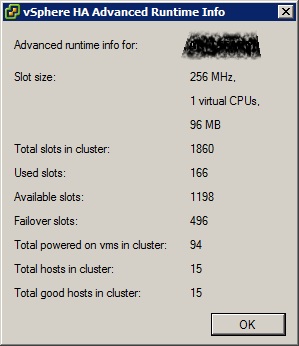

Now look at this one where I set das.slotNumVCpus to 1 (notice the total, used, and available slots):

I might be better off leaving the advanced settings off, but if you have reservations (as originally stated above), they’re still useful if you need them.